I have always felt that the displayed facial expression should reflect the verb most recently executed by the interlocutor. But I just realized that two other factors deserve consideration: mood and perception. If the other actor is angry with you, that anger should show up on their face, and it matters more than any expression intrinsic to the verb.

Should that facial expression reflect the actor's current mood, the actor's current pValue (their perception), or the sum of the two? I'd be willing to go with the sum of the two.
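A minimal sketch of the "sum of the two" option, assuming mood and pValue are both numbers on the same bounded scale, here a hypothetical [-1, 1]. Adding two such values can overshoot the scale, so this sketch clamps the result; `expression_driver` and its `limit` parameter are hypothetical names, not part of any existing engine.

```python
def expression_driver(mood: float, p_value: float, limit: float = 1.0) -> float:
    """Combine mood and pValue by summing them.

    Assumes both inputs lie in [-limit, limit] (an assumption, not a known
    engine rule); the sum is clamped back into that range so a strong mood
    plus a strong perception cannot push the face past its extreme.
    """
    total = mood + p_value
    return max(-limit, min(limit, total))


# A fairly angry actor who also perceives you negatively saturates the scale.
expression_driver(0.75, 0.5)  # clamped to 1.0
```

Clamping is only one choice; scaling the sum by half (averaging) would keep it in range without saturating, at the cost of muting either factor when the other is neutral.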

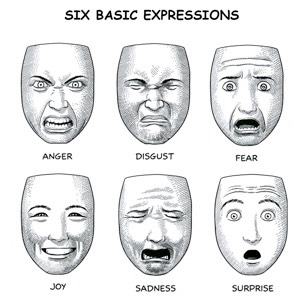

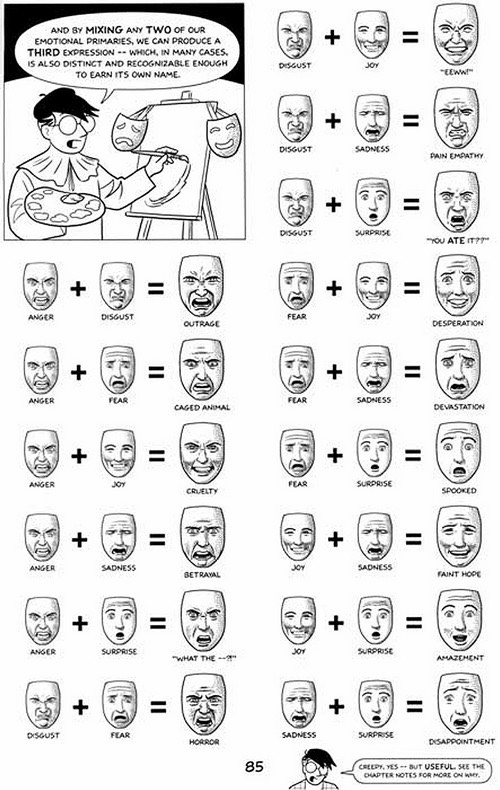

But there’s another problem: how do we show all three dimensions (good, honest, powerful)? Scott McCloud’s book *Making Comics* has a good idea: combine the six basic expressions in pairs to get blended expressions.

I wonder whether this could be the basis for a better facial display system. We would need to specify only the six basic emotions, then combine them in pairs (averaging the two values for each data point) to obtain one of the 36 extended emotions.
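The pairwise averaging can be sketched like this, assuming each face is stored as the same ordered list of 2-D control points (the "data points"), so that point *i* means the same feature on every face. The emotion names, coordinates, and the `blend` function are hypothetical, invented only to illustrate the arithmetic.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Face = List[Point]

# Hypothetical data: every face lists the same control points in the same
# order (say, brow center, left mouth corner, right mouth corner).
# Coordinates are made up for illustration.
BASIC_FACES: Dict[str, Face] = {
    "joy":      [(0.0, 1.0), (-1.0, -0.5), (1.0, -0.5)],
    "surprise": [(0.0, 1.5), (-0.5, -1.0), (0.5, -1.0)],
}


def blend(face_a: Face, face_b: Face) -> Face:
    """Average corresponding data points of two faces."""
    if len(face_a) != len(face_b):
        raise ValueError("faces must have the same number of data points")
    return [((ax + bx) / 2, (ay + by) / 2)
            for (ax, ay), (bx, by) in zip(face_a, face_b)]


blended = blend(BASIC_FACES["joy"], BASIC_FACES["surprise"])
# [(0.0, 1.25), (-0.75, -0.75), (0.75, -0.75)]
```

Because averaging is symmetric, `blend(a, b)` and `blend(b, a)` give the same face, and blending a face with itself returns it unchanged.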

I wonder whether we could also specify the intensity of each emotion by scaling each data point's displacement from its position on the neutral face toward its position on the emotion face. If so, we could specify things like “75% joy + 125% amazement”.
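One way the intensity idea might work, again assuming faces are ordered lists of 2-D control points: each emotion's displacement from the neutral face is multiplied by its intensity, and the scaled displacements are averaged and added back onto the neutral face. The names `NEUTRAL`, `EMOTIONS`, and `mix` and all coordinates are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Face = List[Point]

# Hypothetical control points; coordinates are invented for illustration.
NEUTRAL: Face = [(0.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]
EMOTIONS: Dict[str, Face] = {
    "joy":       [(0.0, 1.0), (-1.0, -0.5), (1.0, -0.5)],
    "amazement": [(0.0, 1.5), (-0.5, -1.0), (0.5, -1.0)],
}


def mix(neutral: Face, emotions: Dict[str, Face],
        intensities: Dict[str, float]) -> Face:
    """Scale each emotion's displacement from the neutral face by its
    intensity (1.0 = 100%), then average the scaled displacements."""
    if not intensities:
        return list(neutral)
    n = len(intensities)
    result: Face = []
    for i, (nx, ny) in enumerate(neutral):
        dx = sum(w * (emotions[name][i][0] - nx) for name, w in intensities.items())
        dy = sum(w * (emotions[name][i][1] - ny) for name, w in intensities.items())
        result.append((nx + dx / n, ny + dy / n))
    return result


face = mix(NEUTRAL, EMOTIONS, {"joy": 0.75, "amazement": 1.25})
# [(0.0, 1.3125), (-0.6875, -0.8125), (0.6875, -0.8125)]
```

Averaging rather than summing the displacements is a design choice: at 100% + 100% it reproduces the plain average of the two faces, and a single emotion at 100% reproduces that emotion's face exactly. Intensities above 100% push points past the emotion face, which is what makes an exaggerated "125%" possible.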

I’ll look into this...