Here’s a pretty problem with an even prettier solution. Arthur selects an option. That option has a delta pValue; each actor must change their pValue towards Arthur by the amount specified in the delta pValue. However, different actors react with different intensities, depending upon their accordance value. We need a Blend function to calculate the final result. It might look like this:

```
pBad_Good[Actor, Arthur] = Blend(pBad_Good[Actor, Arthur], deltaPBad_Good, accordBad_Good[Actor]);
```
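The text doesn't define Blend itself. Here is a minimal sketch that assumes Blend is a weighted average whose third argument is a BNumber weight: -1 returns the first argument unchanged, +1 returns the second, and 0 returns their midpoint. The Python name `blend` and this weight mapping are assumptions for illustration, not the original implementation.

```python
def blend(a: float, b: float, weight: float) -> float:
    """Weighted average of a and b.

    ASSUMPTION: weight is a BNumber in [-1, 1]:
    -1 returns a, +1 returns b, 0 returns the midpoint.
    """
    u = (weight + 1.0) / 2.0  # map the BNumber weight onto a 0..1 fraction
    return a * (1.0 - u) + b * u


# An actor's pValue of 0.2 reacting to a target of 0.8 at two accordance levels.
pliable = blend(0.2, 0.8, 0.0)    # accordance 0.0: moves halfway
stubborn = blend(0.2, 0.8, -0.9)  # accordance -0.9: barely moves
```

Under this assumption, an actor with accordance 0.0 moves halfway from 0.2 to 0.8, while an actor with accordance -0.9 moves only 5% of the way, to about 0.23.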

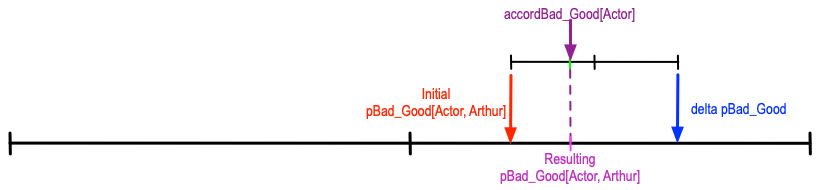

Here’s what this looks like geometrically:

This makes perfect sense, but there’s a problem: every new encounter results in a change that’s just as strong as the first change. The more experience an actor has with Arthur, the less pliable his pValues should be. To put it another way, with increasing experience, an actor’s pValue towards Arthur should become weightier, less amenable to change.

Here’s the pretty way to solve this problem: reduce the accordance value with each change. In other words, after executing the above Blend, we execute a second Blend:

```
accordBad_Good[Actor] = Blend(accordBad_Good[Actor], -0.99, 0.1);
```

This reduces accordBad_Good each time it is used, which is the same as giving more weight to the actor’s existing pBad_Good[Actor, Arthur]. It has one flaw: because each Blend moves accordBad_Good the same fraction of its remaining distance to -0.99, the drop-off is big at first and smaller with every later change. The decline can be made more linear by subtracting a small constant instead:
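To see that drop-off, here is a sketch that applies the second Blend repeatedly, using the same assumed BNumber-weight `blend` as before (an assumption, not the original code). `decay_by_blend` is a hypothetical helper. Because the weight is constant, each use closes the same fraction of the remaining gap to -0.99, so the successive drops shrink geometrically; under this weight mapping, each drop is 0.45 times the previous one.

```python
from typing import List


def blend(a: float, b: float, weight: float) -> float:
    # ASSUMPTION: weight is a BNumber; -1 returns a, +1 returns b.
    u = (weight + 1.0) / 2.0
    return a * (1.0 - u) + b * u


def decay_by_blend(accord: float, uses: int,
                   floor: float = -0.99, rate: float = 0.1) -> List[float]:
    """Accordance after each use, pulled toward `floor` by a Blend (hypothetical helper)."""
    values = []
    for _ in range(uses):
        accord = blend(accord, floor, rate)  # same form as the second Blend above
        values.append(accord)
    return values


history = decay_by_blend(0.0, 10)
# Size of each drop: previous value minus new value.
drops = [prev - cur for prev, cur in zip([0.0] + history, history)]
```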

```
accordBad_Good[Actor] = BAddition(accordBad_Good[Actor], -0.01);
```

Note that this uses BNumber addition, so accordBad_Good stays inside the BNumber range no matter how many times it is applied. Note also that the two constants used (0.1 in the upper Blend, and 0.01 in the lower BAddition) can easily be tuned by experiment.
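Here is a sketch of BNumber addition. It assumes the addition works by mapping each BNumber onto the unbounded real line, adding there, and mapping the sum back into (-1, 1). The particular mapping (x/(1-x) for x ≥ 0, x/(1+x) for x < 0) and the names `to_unbounded`, `to_bounded`, `b_addition`, and `decay_by_addition` are assumptions for illustration.

```python
from typing import List


def to_unbounded(x: float) -> float:
    """ASSUMED mapping of a BNumber in (-1, 1) onto the whole real line."""
    return x / (1.0 - x) if x >= 0 else x / (1.0 + x)


def to_bounded(u: float) -> float:
    """Inverse of to_unbounded: map any real number back into (-1, 1)."""
    return u / (1.0 + u) if u >= 0 else u / (1.0 - u)


def b_addition(x: float, y: float) -> float:
    """BNumber addition: add in unbounded space, then map back."""
    return to_bounded(to_unbounded(x) + to_unbounded(y))


def decay_by_addition(accord: float, uses: int, step: float = -0.01) -> List[float]:
    """Accordance after each use, lowered by a constant BNumber step (hypothetical helper)."""
    values = []
    for _ in range(uses):
        accord = b_addition(accord, step)
        values.append(accord)
    return values


history = decay_by_addition(0.0, 100)
drops = [prev - cur for prev, cur in zip([0.0] + history, history)]
```

With a step of -0.01, each use lowers the accordance by just under 0.01 for the first several uses. The drops shrink only gradually as the value nears -1, and the value never reaches -1.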