So here is today’s problem: I have long had good algorithms that automatically identify the main stars in the field of view, but for some reason those algorithms are not working well in the northern sky. The crucial number here is the “Average Position Error”, which measures just how closely the images in the frame line up with the known positions of the stars that have been identified. Now, I have to seed the system with the positions of a couple of dozen known stars. That is, I go through the initial images by hand and identify about 20 or 30 of the brightest stars, measured their positions, and prime the algorithm with those positions. It uses those to get oriented, and after that operates independently. The Average Position Error based on these stars is around 0.04º, which is very good indeed. However, the algorithms are then unable to identify new stars. I stuck a breakpoint in the code to watch a particular star being analyzed. It found the image of the star and attempted to identify it, but somehow ended up with the wrong star. That turned out to be because the coordinate system was misaligned. Tracking that down, I eventually established that the crucial point “V” (representing the point in the sky exactly vertical to the center of the field of view) was not calculated correctly. It is calculated by a least squares fit algorithm that analyzes the differences between the altitudes of the identified stars (by calculating from their Right Ascension and Declination plus the Local Sidereal Time) and their y-values (their vertical positions on the frame). But that algorithm was somehow screwing up. I decided, for the time being to calculate it by hand and enter the result. I did this by plotting altitudes of the various stars, printing out the result, and eyeballing altitude lines, which I then measured the overall angle with a protractor. Here, by the way, is the frame image:

This is presented in inverse -- black is white and white is black; astronomers have long known that inverse is easier to work with. The image is rather cluttered. Each star (a black dot) that I identified by hand is shown by name, frame coordinates, and altitude. Thus, the star almost at the very center of the image is 35 Draconis, at coordinates 362h by 205v, altitude 21º. The green dot shows the exact center of the image, which is NOT coincident with the center of the frame because the image intensifier was not perfectly lined up with the camera. The difference is small, but significant for some calculations. The green lines show my eyeballed isoaltitude lines; drawing a perpendicular through them yields an angle of attack of about 7º.

Sunday, January 9th

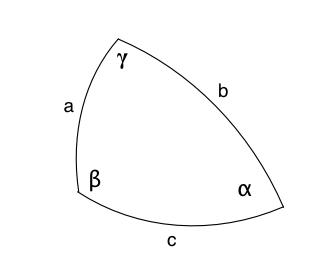

I had been getting rather frustrated with the slow progress I’m making in clearing up these problems, but today the pieces finally started falling together. I sure did make a lot of stupid mistakes: getting signs backwards (plus where it should be minus) and mixing up sines and cosines. Here’s a diagram of the standard spherical trig formula used in all my calculations:

Here, a, b, and c represent the three sides of the spherical triangle and α, β, and γ represent the three internal angles. Remember, these are ALL angles; a spherical triangle lies on the surface of a sphere, so that there are angular distances (like a, b, and c) and surface angles (like α, β, and γ). The basic formula used in almost all the work I do is called the Law of Cosines:

cos a = cos b cos c + sin b sin c cos α

This formula can be used to solve just about any problem I face in spherical trig. Unfortunately, in many of the problems, some of the operators switch from sin to cos and vice versa, because we’re using the complement of the angle. This can all get very confusing when some are switched and some aren’t; you can end up with versions looking like this:

sin a = sin b sin c + cos b cos c cos α

In fact, this is the standard form used to convert from celestial coordinates (right ascension, declination) to observer coordinates (azimuth, altitude) and vice versa. Sometimes you can get mixtures of the two:

sin a = sin b cos c + cos b sin c cos α

Anyway, I got some of these mixed up, which led to no end of trouble. Another problem is very common: trying to get the right values for the right quadrants. Angular relationships in different quadrants are sometimes negated (plus goes to minus, and vice versa) and sometimes you need to add or subtract the number from 180º or 90º. Toss in the fact that Java works in radians rather than degrees, making the debugging a little less common-sensical, and things can get very confusing. At one point, I pulled out my globe and used latitude and longitude to represent declination and right ascension, then put tiny Post-It labels at various locations so that I could visualize exactly what the situation was. In one case, I even taped strings between the various points to show the spherical triangle explicitly.

In my high school and college years, I was able to do all this visualization in my head without any visual aids, but I seem to be going senile, so I need the help. In any case, I eventually got it all working (I think). Here’s the basic spherical trig code that underlies about 80% of the calculations used in the system (sorry for the lack of indentation; I can’t seem to get it working with this website editor):

double vAlt=Math.asin(Math.cos(center.alt)*Math.cos(aZ2C2V));

double aC2Z2V=Math.acos((-Math.tan(vAlt)*Math.tan(center.alt)));

double vAz=aC2Z2V+center.az;

vertical=new SkyPosition(vAz,vAlt,false);

double dV2R=Math.acos(Math.sin(vertical.dec)*Math.sin(radiantDec)+Math.cos(vertical.dec)*Math.cos(radiantDec)*Math.cos(vertical.ra-radiantRA));

double dV2C=Math.acos(Math.sin(vertical.dec)*Math.sin(center.dec)+Math.cos(vertical.dec)*Math.cos(center.dec)*Math.cos(vertical.ra-center.ra));

double aN2V2C=Math.acos((Math.sin(center.dec)-Math.sin(vertical.dec)*Math.cos(dV2C))/(Math.cos(vertical.dec)*Math.sin(dV2C)));

for (int x=0; (x<gridWidth); ++x) {

for (int y=0; (y<gridHeight); ++y) {

double thisX=gridSpacing*x;

double thisY=gridSpacing*y;

double dx=thisX-centerX;

double dy=thisY-centerY;

double dxa=dx/horzRad2Pix;

double dya=dy/vertRad2Pix;

double dC2P=Math.sqrt(dxa*dxa+dya*dya);

double aV2C2P=0;

if ((dxa>=0)&(dya>=0)) aV2C2P=pi/2+Math.atan(dya/dxa);

if ((dxa>=0)&(dya<0)) aV2C2P=pi/2+Math.atan(dya/dxa);

if ((dxa<0)&(dya>=0)) aV2C2P=pi+Math.atan(dya/dxa);

if ((dxa<0)&(dya<0)) aV2C2P=-Math.atan(-dya/dxa);

double dV2P=Math.acos(Math.sin(dC2P)*Math.cos(aV2C2P));

double aP2V2C=Math.acos(Math.cos(dC2P)/Math.sin(dV2P));

double aP2V2N=0;

if (dxa>0) aP2V2N=aN2V2C+aP2V2C;

else aP2V2N=aN2V2C-aP2V2C;

double pDec=Math.asin(Math.cos(dV2P)*Math.sin(vertical.dec)+Math.sin(dV2P)*Math.cos(vertical.dec)*Math.cos(aP2V2N));

double deltaRA=Math.acos((Math.cos(dV2P)-Math.sin(vertical.dec)*Math.sin(pDec))/(Math.cos(vertical.dec)*Math.cos(pDec)));

// Potential source of trouble here:

// The formula for angle deltaRA is ambiguous, yielding two solutions

// The default solution is not correct for the right side of the aircraft.

// Since I can’t easily figure the differentiating criterion, I am for

// the moment hard-coding it in the next line:

deltaRA=2*pi-deltaRA;

double pRA=vertical.ra+deltaRA;

double dP2R=Math.acos(Math.sin(pDec)*Math.sin(radiantDec)+Math.cos(pDec)*Math.cos(radiantDec)*Math.cos(pRA-radiantRA));

double theta=Math.acos((Math.cos(dV2R)-Math.cos(dV2P)*Math.cos(dP2R))/(Math.sin(dV2P)*Math.sin(dP2R)));

tRA[x][y]=pRA;

tDec[x][y]=pDec;

double pAlt=Math.asin(Math.sin(pDec)*Math.sin(latitude)+Math.cos(pDec)*Math.cos(latitude)*Math.cos(pRA-LST));

tAltitude[x][y]=pAlt;

tAzimuth[x][y]=Math.acos((Math.sin(pDec)-Math.sin(latitude)*Math.sin(pAlt))/(Math.cos(latitude)*Math.cos(pAlt)));

tRange[x][y]=entryHeight/Math.sin(pAlt);

double leonidAngularVelocity=(Math.sin(dP2R)*linearVelocity/tRange[x][y])/30; // 30 fps

if (leonidAngularVelocity<0.0004)

System.out.println("abnormally low Leonid Angular Velocity: "+leonidAngularVelocity);

tDX[x][y]=-horzRad2Pix*leonidAngularVelocity*Math.sin(pi-theta); // northern version

tDY[x][y]=-vertRad2Pix*leonidAngularVelocity*Math.cos(pi-theta);

}

}

Ain’t that purty?